Laborers Who Keep Beheadings Out of Your Facebook Feed — Best Business Writing 2015

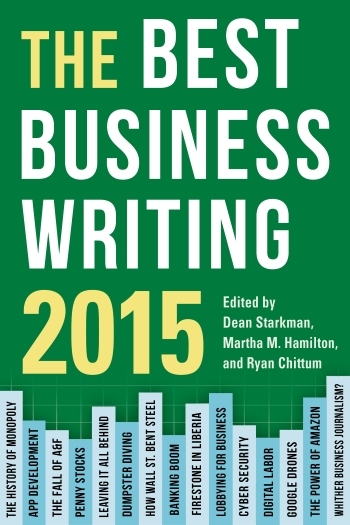

Recent terrorist incidents have focused attention on the role of social media in recruiting members. In his piece, “The Laborers Who Keep Dick Pics and Beheadings Out of Your Facebook Feed,” first published in Wired and now included in The Best Business Writing 2015, Adrian Chen takes a closer look at the process of removing objectionable images from social media sites and the toll it takes on moderators. The following is an excerpt from the article:

The campuses of the tech industry are famous for their lavish cafeterias, cushy shuttles, and on-site laundry services. But on a muggy February afternoon, some of these companies’ most important work is being done 7,000 miles away, on the second floor of a former elementary school at the end of a row of auto mechanics’ stalls in Bacoor, a gritty Filipino town thirteen miles southwest of Manila. When I climb the building’s narrow stairwell, I need to press against the wall to slide by workers heading down for a smoke break. Up one flight, a drowsy security guard staffs what passes for a front desk: a wooden table in a dark hallway overflowing with file folders.

Past the guard, in a large room packed with workers manning PCs on long tables, I meet Michael Baybayan, an enthusiastic twenty-one-year-old with a jaunty pouf of reddish-brown hair. If the space does not resemble a typical startup’s office, the image on Baybayan’s screen does not resemble typical startup work: It appears to show a super-close-up photo of a two-pronged dildo wedged in a vagina. I say appears because I can barely begin to make sense of the image, a baseball-card-sized abstraction of flesh and translucent pink plastic, before he disappears it with a casual flick of his mouse.

Baybayan is part of a massive labor force that handles “content moderation”—the removal of offensive material—for U.S. social-networking sites. As social media connects more people more intimately than ever before, companies have been confronted with the Grandma Problem: Now that grandparents routinely use services like Facebook to connect with their kids and grandkids, they are potentially exposed to the Internet’s panoply of jerks, racists, creeps, criminals, and bullies. They won’t continue to log on if they find their family photos sandwiched between a gruesome Russian highway accident and a hardcore porn video. Social media’s growth into a multi-billion-dollar industry and its lasting mainstream appeal have depended in large part on companies’ ability to police the borders of their user-generated content—to ensure that Grandma never has to see images like the one Baybayan just nuked.

So companies like Facebook and Twitter rely on an army of workers employed to soak up the worst of humanity in order to protect the rest of us. And there are legions of them—a vast, invisible pool of human labor. Hemanshu Nigam, the former chief security officer of MySpace who now runs online-safety consultancy SSP Blue, estimates that the number of content moderators scrubbing the world’s social media sites, mobile apps, and cloud storage services runs to “well over 100,000”—that is, about twice the total head count of Google and nearly fourteen times that of Facebook.

This work is increasingly done in the Philippines. A former U.S. colony, the Philippines has maintained close cultural ties to the United States, which content moderation companies say helps Filipinos determine what Americans find offensive. And moderators in the Philippines can be hired for a fraction of American wages. Ryan Cardeno, a former contractor for Microsoft in the Philippines, told me that he made $500 per month by the end of his three-and-a-half-year tenure with outsourcing firm Sykes. Last year, Cardeno was offered $312 per month by another firm to moderate content for Facebook, paltry even by industry standards.

Here in the former elementary school, Baybayan and his coworkers are screening content for Whisper, an LA-based mobile startup—recently valued at $200 million by its VCs—that lets users post photos and share secrets anonymously. They work for a U.S.-based outsourcing firm called TaskUs. It’s something of a surprise that Whisper would let a reporter in to see this process. When I asked Microsoft, Google, and Facebook for information about how they moderate their services, they offered vague statements about protecting users but declined to discuss specifics. Many tech companies make their moderators sign strict nondisclosure agreements, barring them from talking even to other employees of the same outsourcing firm about their work.

“I think if there’s not an explicit campaign to hide it, there’s certainly a tacit one,” says Sarah Roberts, a media studies scholar at the University of Western Ontario and one of the few academics who study commercial content moderation. Companies would prefer not to acknowledge the hands-on effort required to curate our social-media experiences, Roberts says. “It goes to our misunderstandings about the Internet and our view of technology as being somehow magically not human.”

I was given a look at the Whisper moderation process because Michael Heyward, Whisper’s CEO, sees moderation as an integral feature and a key selling point of his app. Whisper practices “active moderation,” an especially labor-intensive process in which every single post is screened in real time; many other companies moderate content only if it’s been flagged as objectionable by users, which is known as reactive moderating. “The type of space we’re trying to create with anonymity is one where we’re asking users to put themselves out there and feel vulnerable,” he tells me. “Once the toothpaste is out of the tube, it’s tough to put it back in.”

Watching Baybayan’s work makes terrifyingly clear the amount of labor that goes into keeping Whisper’s toothpaste in the tube. (After my visit, Baybayan left his job and the Bacoor office of TaskUs was raided by the Philippine version of the FBI for allegedly using pirated software on its computers. The company has since moved its content-moderation operations to a new facility in Manila.) He begins with a grid of posts, each of which is a rectangular photo, many with bold text overlays—the same rough format as old-school Internet memes. In its freewheeling anonymity, Whisper functions for its users as a sort of externalized id, an outlet for confessions, rants, and secret desires that might be too sensitive (or too boring) for Facebook or Twitter. Moderators here view a raw feed of Whisper posts in real time. Shorn from context, the posts read like the collected tics of a Tourette’s sufferer. Any bisexual women in NYC wanna chat? Or: I hate Irish accents! Or: I fucked my stepdad then blackmailed him into buying me a car.

A list of categories, scrawled on a whiteboard, reminds the workers of what they’re hunting for: pornography, gore, minors, sexual solicitation, sexual body parts/images, racism. When Baybayan sees a potential violation, he drills in on it to confirm, then sends it away—erasing it from the user’s account and the service altogether—and moves back to the grid. Within twenty-five minutes, Baybayan has eliminated an impressive variety of dick pics, thong shots, exotic objects inserted into bodies, hateful taunts, and requests for oral sex.

More difficult is a post that features a stock image of a man’s chiseled torso, overlaid with the text “I want to have a gay experience, M18 here.” Is this the confession of a hidden desire (allowed) or a hookup request (forbidden)? Baybayan—who, like most employees of TaskUs, has a college degree—spoke thoughtfully about how to judge this distinction. “What is the intention?” Baybayan says. “You have to determine the difference between thought and solicitation.” He has only a few seconds to decide. New posts are appearing constantly at the top of the screen, pushing the others down. He judges the post to be sexual solicitation and deletes it; somewhere, a horny teen’s hopes are dashed. Baybayan scrolls back to the top of the screen and begins scanning again.

Eight years after the fact, Jake Swearingen can still recall the video that made him quit. He was twenty-four years old and between jobs in the Bay Area when he got a gig as a moderator for a then-new startup called VideoEgg. Three days in, a video of an apparent beheading came across his queue.

“Oh fuck! I’ve got a beheading!” he blurted out. A slightly older colleague in a black hoodie casually turned around in his chair. “Oh,” he said, “which one?” At that moment Swearingen decided he did not want to become a connoisseur of beheading videos. “I didn’t want to look back and say I became so blasé to watching people have these really horrible things happen to them that I’m ironic or jokey about it,” says Swearingen, now the social-media editor at Atlantic Media.

While a large amount of content moderation takes place overseas, much is still done in the United States, often by young college graduates like Swearingen was. Many companies employ a two-tiered moderation system, where the most basic moderation is outsourced abroad while more complex screening, which requires greater cultural familiarity, is done domestically. U.S.-based moderators are much better compensated than their overseas counterparts: A brand-new American moderator for a large tech company in the United States can make more in an hour than a veteran Filipino moderator makes in a day. But then a career in the outsourcing industry is something many young Filipinos aspire to, whereas American moderators often fall into the job as a last resort, and burnout is common.

“Everybody hits the wall, generally between three and five months,” says a former YouTube content moderator I’ll call Rob. “You just think, ‘Holy shit, what am I spending my day doing? This is awful.’ ”

Rob became a content moderator in 2010. He’d graduated from college and followed his girlfriend to the Bay Area, where he found his history degree had approximately the same effect on employers as a face tattoo. Months went by, and Rob grew increasingly desperate. Then came the cold call from CDI, a contracting firm. The recruiter wanted him to interview for a position with Google, moderating videos on YouTube. Google! Sure, he would just be a contractor, but he was told there was a chance of turning the job into a real career there. The pay, at roughly $20 an hour, was far superior to a fast-food salary. He interviewed and was given a one-year contract. “I was pretty stoked,” Rob said. “It paid well, and I figured YouTube would look good on a résumé.”

For the first few months, Rob didn’t mind his job moderating videos at YouTube’s headquarters in San Bruno. His coworkers were mostly new graduates like himself, many of them liberal arts majors just happy to have found employment that didn’t require a hairnet. His supervisor was great, and there were even a few perks, like free lunch at the cafeteria. During his eight-hour shifts, Rob sat at a desk in YouTube’s open office with two monitors. On one he flicked through batches of ten videos at a time. On the other monitor, he could do whatever he wanted. He watched the entire Battlestar Galactica series with one eye while nuking torture videos and hate speech with the other. He also got a fascinating glimpse into the inner workings of YouTube. For instance, in late 2010, Google’s legal team gave moderators the urgent task of deleting the violent sermons of the American radical Islamist preacher Anwar al-Awlaki after a British woman said she was inspired by them to stab a politician.

But as months dragged on, the rough stuff began to take a toll. The worst was the gore: brutal street fights, animal torture, suicide bombings, decapitations, and horrific traffic accidents. Arab Spring was in full swing, and activists were using YouTube to show the world the government crackdowns that resulted. Moderators were instructed to leave such “newsworthy” videos up with a warning, even if they violated the content guidelines. But the close-ups of protesters’ corpses and street battles were tough for Rob and his coworkers to handle. So were the videos that documented misery just for the sick thrill of it.

“If someone was uploading animal abuse, a lot of the time it was the person who did it. He was proud of that,” Rob says. “And seeing it from the eyes of someone who was proud to do the fucked-up thing, rather than news reporting on the fucked-up thing—it just hurts you so much harder, for some reason. It just gives you a much darker view of humanity.”

Rob began to dwell on the videos outside of work. He became withdrawn and testy. YouTube employs counselors whom moderators can theoretically talk to, but Rob had no idea how to access them. He didn’t know anyone who had. Instead, he self-medicated. He began drinking more and gained weight.

It became clear to Rob that he would likely never become a real Google employee. A few months into his contract, he applied for a job with Google but says he was turned down for an interview because his GPA didn’t meet the requirement. (Google denies that GPA alone would be a deciding factor in its hiring.) Even if it had, Rob says, he’s heard of only a few contractors who ended up with staff positions at Google.

A couple of months before the end of his contract, he found another job and quit. When Rob’s last shift ended at seven p.m., he left feeling elated. He jumped into his car, drove to his parents’ house in Orange County, and slept for three days straight.